The Raspberry Pi is a small ARM11-based board (ARM1176JZF-S, ARMv6 instruction set) with a 100 Mbit Ethernet port, HDMI, analog video, GPIO pins, SPI, I²C, UART and two USB ports. It uses the same ARM1176JZF-S processor core as the first-generation Apple iPhone.

- What you get – no SD card included

The Raspberry Pi costs only about $25 to $30 and was designed with educational use in mind. You can order the device from Great Britain for 34 €, including shipping to Germany and a T-shirt. Thanks to its low power consumption (3.5 W, fanless, no heat sink required), its tiny size (credit card form factor) and its low price, the Raspberry Pi is an ideal device for building energy-efficient solutions such as NAS, routers and media centers. The Raspberry Pi uses an SD card as its mass storage device, onto which a suitable Linux distribution such as Raspbian “wheezy”, a modified Debian, is installed. The distribution consists of a modern Linux system with a resource-optimized desktop and a slim WebKit-based browser (Midori). Although the Raspberry Pi was not designed for this use case, it is fast enough to surf the Internet!

- The Raspberry Pi, T-shirt included

The custom Linux distribution Raspbian “wheezy” is strongly recommended because it supports the hardware floating point unit of the ARM11 processor. The Debian ARM “armel” port (for ARMv4T, ARMv5 and ARMv6 devices) does not use the hardware floating point capabilities of the ARM11 (ARMv6). The Debian “armhf” port and the Ubuntu ARM distributions support only devices with the ARMv7 instruction set (at least an ARM Cortex-A8).

To make sure that your own programs use the hardware floating point unit, set the following compiler options:

`-mcpu=arm1176jzf-s -mfpu=vfp -mfloat-abi=hard`

Otherwise the floating point operations will be emulated in software, which is approximately 10 times slower.

ARM processor guide and Android tablet buying hints

ARM processors, or more precisely ARM cores integrated on a chip (system on a chip, SoC), power almost all current Android smartphones and tablets. The Apple A5 SoC in the iPhone and iPad is also based on ARM cores. Apart from the processor running the user environment (e.g. Android or iOS), a modern smartphone contains several other ARM cores. Smaller, energy-efficient ARM cores are used, for instance, in the phone's radio chip, which handles all GSM/UMTS (2G/3G) communication tasks. Most of today’s Bluetooth and GPS chipsets contain an ARM core too. Your smartphone is likely equipped with four or more ARM cores spread across different chipsets. The naming of ARM processor cores (e.g. ARM11, Cortex-A8) should not be confused with the naming of the ARM architecture versions (e.g. ARMv6, ARMv7); see http://en.wikipedia.org/wiki/ARM_architecture. Likewise, the product names that different manufacturers give their SoCs do not follow the standard ARM naming conventions. This site gives some hints about which ARM core is integrated in the SoCs of common vendors, and it is very useful for comparing the performance of the SoCs found in Android tablets. As a rule of thumb: don’t buy a device with an ARM core older than the Cortex-A8. Tablets with an ARM Cortex-A8 CPU are available nowadays for about 100 €. But expect iPad 3-like performance only from a device with at least a multi-core ARM Cortex-A9 (ARMv7).

- Desktop of Raspbian “wheezy” distribution, Midori web browser

MD5 hash collision

To test the computing performance of the Raspberry Pi's ARM11 processor (ARMv6), I did not choose a standard benchmark but the MD5 Collision Demo by Peter Selinger.

It implements an algorithm that attacks the MD5 hash by computing a collision. Such collisions can be used to create a pair of binaries (or documents), one harmless and one manipulated, with identical hash values. The algorithm always starts from a random value. If several instances of the algorithm run on multiple CPUs or cores with different random start values, the chance of finding a collision within a given time increases. The algorithm itself is not parallelized, but it profits from running with different random start values.

PC versus …

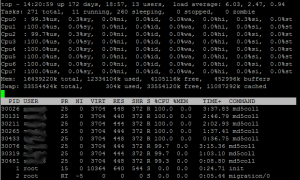

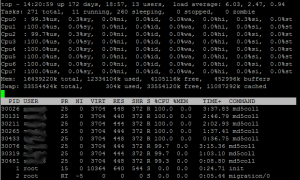

The first run was done on a single-core Atom netbook (2 h 46 min). An 8-core Intel machine (two quad-core Xeon processors) needed only 16 minutes and 6 seconds to find a collision. At that point only one core had found a collision; the last core did not find one until after 3 hours (8 × 100% CPU load, see picture).

- the top command (press ‘1’ to see all cores)

… CRAY versus …

I had previously run the same task on the Cray XT6m supercomputer of the University of Duisburg-Essen in June 2010. At that time I was limited to 300 of its 4128 cores. One of the cores found a hash collision after 56 seconds. On the Cray it is easy to start such a job automatically on all available cores at once.

- Cray supercomputer at the University of Duisburg-Essen

… Raspberry Pi

The small Raspberry Pi found a hash collision after 30 hours and 15 minutes. The algorithm is not a real benchmark: with bad luck you can get an unfavorable random start value. Two other runs finished after 19 hours 10 minutes and 29 hours 28 minutes, respectively. But how does the Raspberry Pi compare to the Cray in energy efficiency?

- Raspberry Pi: cheaper and quieter, but slower than a Cray supercomputer. Surprisingly similar energy consumption relative to the computing power.

The two Cray units at the University of Duisburg-Essen consume 40 kW each. To dissipate the heat, the air conditioning needs the same amount of power again, so the overall power consumption of the installation is about 160 kW. The 300 of 4128 cores used in the experiment account for about 160 kW × 300/4128 ≈ 11.6 kW, so in 56 seconds they use about 0.18 kWh of electrical energy. The Raspberry Pi draws about 0.0035 kW; after 30.25 hours its energy usage is about 0.106 kWh. If the energy for the air conditioning is left out (about 0.09 kWh for the Cray share), the energy consumption relative to the computing power is, surprisingly, more or less the same for both devices!