pagefile.sys forensics: Beware of Yara false positives due to Microsoft Defender artifacts

May 24th, 2022

Since I do a lot of forensics, I discovered Andrea Fortuna’s site, which has a lot of useful information. However, in one case his advice no longer holds (I assume this depends on the Windows 10 version; I used Win10 EDU 21H2 for my research): pagefile.sys forensics:

https://andreafortuna.org/2019/04/17/how-to-extract-forensic-artifacts-from-pagefile-sys/

Scanning pagefile.sys with Yara, or extracting URL artifacts with strings, leads to false positives caused by Microsoft Defender memory artifacts, even on a freshly installed Windows:

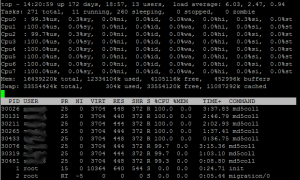

On a fresh Windows 10 install that had been connected to the internet for just 5 minutes, yara reports:

APT1_LIGHTBOLT pagefile.sys
Tofu_Backdoor pagefile.sys
APT9002Code pagefile.sys
APT9002Strings pagefile.sys
APT9002 pagefile.sys
Cobalt_functions pagefile.sys
NK_SSL_PROXY pagefile.sys
Industroyer_Malware_1 pagefile.sys
Industroyer_Malware_2 pagefile.sys
malware_red_leaves_memory pagefile.sys
GEN_PowerShell pagefile.sys
SharedStrings pagefile.sys
Trojan_W32_Gh0stMiancha_1_0_0 pagefile.sys
spyeye pagefile.sys
with_sqlite pagefile.sys
MALW_trickbot_bankBot pagefile.sys
XMRIG_Miner pagefile.sys
Ursnif pagefile.sys
easterjackpos pagefile.sys
Bolonyokte pagefile.sys
Cerberus pagefile.sys
DarkComet_1 pagefile.sys
xtreme_rat pagefile.sys
xtremrat pagefile.sys

which are definitely false positives!

I used the malware_index.yar from the Yara-Rules repository:

wget https://github.com/Yara-Rules/rules/archive/refs/heads/master.zip

Even if the freshly installed Windows 10 is completely isolated from the network, yara still finds some artifacts:

APT1_LIGHTBOLT pagefile.sys
GEN_PowerShell pagefile.sys
with_sqlite pagefile.sys
Bolonyokte pagefile.sys
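To see which hits only show up once the machine has been online, the two result lists can be compared. The following is a minimal sketch, assuming both scans were saved in yara's default one-hit-per-line "RULE file" format; the function name rules_only_in and the log file names are placeholders of mine, not part of the original setup.

```shell
# rules_only_in LOG BASELINE
# Print every rule name that appears in LOG but not in BASELINE,
# in the order of first appearance in LOG.
# Assumption: each line looks like "RULE_NAME pagefile.sys".
rules_only_in() {
    awk '
        FILENAME == ARGV[1] { seen[$1] = 1; next }   # first file: the baseline
        !($1 in seen) && !printed[$1]++ { print $1 } # second file: new rules only
    ' "$2" "$1"
}

# Example call (hypothetical file names):
# rules_only_in yara_online.log yara_isolated.log
```

The same comparison could serve as a rough baseline filter: hits that also occur on a clean reference install of the same Windows build are more likely to be Defender artifacts than evidence.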

The list of URLs extracted with the strings command

$ strings pagefile.sys | grep -E "^https?://" | sort | uniq > url_findings.txt

is itself detected as malware on Windows ;-). My assumption was that the malware artifacts originate from the malware signatures of Windows Defender. To clarify this, I’ve done some experiments with Windows 10 virtual machines under Linux.

Since Windows Defender is an integral part of Windows 10 and 11, removing it completely from a fresh installation is not an easy task. None of the guides I found work with current Windows 10 versions since 21H2. Finally, I found a PowerShell script on Jeremy’s site bidouillesecurity.com:

https://bidouillesecurity.com/disable-windows-defender-in-powershell/

However, even with this script, Windows Update tries to download malware signatures, which will eventually end up as artifacts in pagefile.sys. Only if I fully block internet access for the freshly created installation do no malware artifacts appear in pagefile.sys. Preventing Windows updates is not an easy task nowadays: just setting the Ethernet connection to metered no longer works in current versions.

For my experiments, I created virtual machines in VMware Player, converted the VMDK images to raw format with qemu-img and copied pagefile.sys out of the image for forensic investigation.

qemu-img convert -p -O raw /path/myWin10.vmdk vm.raw

Then set up the loop device:

losetup /dev/loop100 -P vm.raw

The -P option scans for partitions. I force the loop device loop100 (-f would pick the next free one, but 100 should always be free).
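As an alternative to a hardcoded device number, losetup can pick the next free device and report which one it chose. This is only a sketch: the helper names attach_image and partition_path are made up for illustration, and the commands need root just like the ones in this walkthrough.

```shell
# attach_image IMAGE
# Attach a raw image to the next free loop device, scan its partition
# table, and print the name of the device that was used.
attach_image() {
    losetup --find --show --partscan "$1"
}

# partition_path DEVICE N
# Partitions of a loop device are named <device>p<N>, e.g. /dev/loop0p3.
partition_path() {
    printf '%sp%s\n' "$1" "$2"
}

# Usage (as root; partition 3 is the one mounted in this walkthrough):
# dev=$(attach_image vm.raw)
# mount "$(partition_path "$dev" 3)" /mnt/image
```

Keeping the device name in a variable also means the later losetup -d call can detach exactly the device that was attached.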

Mount the image:

mount /dev/loop100p3 /mnt/image

Copy it:

cp /mnt/image/pagefile.sys .

Yara scan:

yara /home/bischoff/yara-rules/rules-master/malware_index.yar pagefile.sys > yara_neu.log

URL extraction:

strings pagefile.sys | grep -E "^https?://" | sort | uniq > alle_urls.txt
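A long URL list is easier to triage when grouped by host. The sketch below counts how often each host occurs in a file such as alle_urls.txt; the function name url_hosts is hypothetical, and it assumes one URL per line starting with http:// or https://, as produced by the command above.

```shell
# url_hosts FILE
# Print "<count> <host>" for every host in a list of URLs,
# most frequent host first (ties sorted by host name).
url_hosts() {
    # Splitting "scheme://host/path" on "/" puts the host in field 3.
    awk -F/ '{ count[tolower($3)]++ }
             END { for (h in count) print count[h], h }' "$1" |
        sort -k1,1nr -k2,2
}

# Example call:
# url_hosts alle_urls.txt
```

Strings pulled from a pagefile are often truncated or followed by junk, so the host field may contain noise; treat the counts as a rough overview only.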

To wipe the original pagefile.sys completely, I used:

shred -uvz /mnt/image/pagefile.sys

Unmount the image and detach the loop device:

umount /mnt/image
losetup -d /dev/loop100

Convert the raw image back to a VMware image:

qemu-img convert -p -O vmdk vm.raw /path/myWin10.vmdk

Even if you can’t get a memory dump of an infected machine, a hiberfil.sys or a pagefile.sys provides the forensics engineer with indirect information about memory content. But beware of Defender artifacts, which can lead you to false positive detections.